Do you see the elephant in this picture? For a computer, even an "intelligent" one, this is not so easy.

We encounter applications of machine learning almost every day; self-driving cars and boats are one example. Or think of the amazing drone show in Amsterdam last August! Neural networks are among the most powerful and important tools in machine learning. In this article I want to present some ideas from two recent articles on neural networks, omitting the technical details. The first is an interview with Lambert Schomaker, professor of artificial intelligence at the University of Groningen [1]. The second is an article about computer vision and neural networks that appeared recently in Quanta Magazine [2].

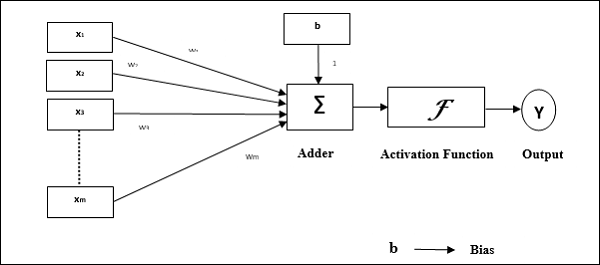

Let me start with some historical facts. The first results on machine learning appeared in the 1940s, when D.O. Hebb formulated a learning hypothesis based on the mechanism of neural plasticity. A major breakthrough came in 1958, when F. Rosenblatt created the perceptron, an algorithm for pattern recognition. The perceptron is one of the first mathematical models of a neural network, and it was the heart of the first wave of neural networks. The high expectations were soon tempered by the limitations the perceptron turned out to have: around 1970 the mathematician M. Minsky, together with S. Papert, proved that a single-layer perceptron cannot solve problems that are not linearly separable, such as computing the XOR of two inputs. This led to the second wave around 1975, in which more sophisticated neural networks were constructed that could handle more complicated problems. Finally, from around 2005 until today, we speak of the third wave, with many new ideas and methods, such as deep learning.

A perceptron, introduced by Rosenblatt.
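To make Minsky's objection a little more concrete, here is a minimal sketch of the classic perceptron learning rule in Python. It is an illustration only, not taken from [1] or [2]; it assumes two binary inputs, a step activation and a learning rate of 1, and the function names are hypothetical. The perceptron learns the logical AND, which is linearly separable, but it can never get all four cases of XOR right, because no single straight line separates the XOR outputs.

```python
# A minimal sketch of Rosenblatt-style perceptron learning on two binary inputs.
# Assumptions (illustration only): step activation, learning rate 1,
# a fixed maximum number of epochs; function names are hypothetical.

def predict(w, b, x):
    """Fire (output 1) if the weighted sum of the inputs exceeds 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron rule: nudge the weights toward each misclassified example."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in data:
            error = target - predict(w, b, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                w[0] += lr * error * x[0]
                w[1] += lr * error * x[1]
                b += lr * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return w, b


def accuracy(w, b, data):
    """Fraction of examples the trained perceptron classifies correctly."""
    return sum(predict(w, b, x) == t for x, t in data) / len(data)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    print(name, accuracy(w, b, data))
```

For AND the accuracy reaches 1.0 after a few epochs; for XOR it stays below 1.0 however many epochs are allowed. Networks with one or more hidden layers do not have this limitation.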

What does the landscape look like today?

In July I had a very interesting and inspiring discussion with Prof. dr. Lambert Schomaker. The full interview can be found in Nieuw Archief voor Wiskunde (special issue on machine learning) [1]. Among other things, we discussed modern machine learning techniques and some major concerns around them. A concern that is often voiced is that a neural network behaves like a "black box" and is therefore not very trustworthy. There is some truth in this statement, in the sense that we cannot explicitly describe how the network works internally. In practice, however, it is more important to have solid machinery for statistically analyzing the output of the neural network, and a lot of research has been done in this direction, with remarkable results [1]! Furthermore, what I realized during the interview is that we use such implicit methods every day. A striking example is blood pressure measurement: a non-invasive, empirical method that we feel confident about.

A second point I would like to discuss concerns the current status of machine learning and its future challenges. Nowadays, neural networks can perform amazingly well when there is sufficient data to train them. A very interesting example is computer Go. In 2015 AlphaGo became the first computer Go program to beat a human professional Go player. But AlphaGo was trained on games previously played by human experts! "This is no artificial intelligence. It is intelligence by proxy," says Schomaker. DeepMind picked up on this and created AlphaGo Zero, which was trained using only the rules of the game, by letting it play against itself millions of times. But even in AlphaGo Zero the learning process is static: the neural network is trained, but it does not keep learning during the actual game. This capacity for dynamic learning is often missing when neural networks are used in practice, and that may be one of the reasons why they sometimes perform poorly when new data arrives from the outside world.

A result that is different in nature but similar in spirit was described in a recent article in Quanta Magazine [2]. It concerns computer vision. In the experiment, a computer had to identify all the objects in an image of a living room, and it succeeded. But when an elephant was added to the image, things got tricky: the computer even failed to correctly identify objects it had recognized before, and a couch was misidentified as a chair!

“It’s a clever and important study that reminds us that ‘deep learning’ isn’t really that deep...” [2]

Researchers are still trying to understand exactly why computer vision systems get tripped up so easily, but they have a good guess. It has to do with an ability that humans have and AI lacks: the ability to understand when a scene is confusing and therefore go back for a second glance [2]. When we see a picture of an elephant in a living room, it may seem weird, but in the end it is just an elephant in a living room. The neural network in our brain is much more flexible and does not collapse when something is confusing.

[1] "Het gaat nu echt snel" ("Now it is really moving fast"), Nieuw Archief voor Wiskunde, September 2018.

[2] "Machine Learning Confronts the Elephant in the Room", Quanta Magazine.

The featured photo is borrowed from chartec.net.