How the future of computing might be analog

A car, a home, and a wristwatch: all of them seem to be “smart” today. This intelligence runs on computing power, which lately made headlines for being scarce. The reasons are manifold, including global supply chains being interrupted by COVID-19 and a cargo ship attempting a drift in the Suez Canal, but there is also a more fundamental problem: the growth rates of computing demand and computing capacity are heading in different directions.

So this story has two sides, demand and capacity, and they are at odds. That is a good reason to look at what is so computing-hungry in the modern world (section Computing demand) and why advances in building computers can’t keep up (section Computing capacity). Because this problem touches on such a central issue of modern technology, many researchers are trying to find new ways to build computers. In doing so, they take inspiration from nature (section Brain-inspired computers). One specific direction of this research is computing with light, called neuromorphic photonics (section Neuromorphic photonics).

Computing demand

The demand for computing does not seem to go anywhere but up. Large amounts of data are captured and processed everywhere: by businesses, scientific institutions, self-driving cars, and governments. Take self-driving cars, for example: they constantly take all sorts of measurements of their environment, and those measurements need to be processed right away by an algorithm that decides how the car should behave.

Such an algorithm certainly cannot be very simple, because many things need to be taken into account. Even if a road is well known to the algorithm, there can always be random events, like a child chasing after a ball, and the algorithm should then deviate from the decision it previously made for that road. The algorithms used to evaluate the data from the car’s sensors are therefore quite complicated. Usually, when one thinks of an algorithm, one thinks of the “if X, then Y” kind, like a recipe for baking. The algorithms evaluating the car’s environment are different: they are inspired by the information processing of the brain and fall under the umbrella of machine learning.

The name may be a bit too ambitious: machines are not really able to learn as a human does. In machine learning, a lot of data and computing power is used to find a set of parameters for which the desired outcomes are obtained. The number of parameters influencing the output is staggering. And here we connect back to the original story: machine learning algorithms, which are used to solve those complicated problems for which it is hard to write algorithms of the classical kind, require even more computing capacity.
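To make "finding a set of parameters" a little more concrete, here is a minimal sketch with just two parameters. The toy data, the straight-line model, and the names `fit_line`, `learning_rate`, and `steps` are assumptions chosen for illustration; real machine learning models follow the same idea with millions or billions of parameters.

```python
# Toy data generated from y = 2*x + 1 (an assumption made for this example).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Search for parameters (w, b) such that w*x + b matches ys.

    Uses plain gradient descent on the mean squared error.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction errors with the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Nudge both parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Even this tiny problem takes thousands of update steps, each touching every data point, which hints at why larger models are so computing-hungry.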

Advances in the quality of learning machines also seem to come only with larger, and thus more computing-hungry, machines. All the more reason to turn our attention to the following.

Computing capacity

Back in the 1960s, when NASA conducted its moon missions, it used a computer for its calculations that took up a building and had less computing power than a modern pocket calculator. That such computing power now fits in a pocket is due to steady advances in technology that doubled the number of transistors per chip roughly every two years. This doubling held up for most of the second half of the 20th century and the first decade of the current century. Because it lasted as if it were a law of nature, it is often referred to as “Moore’s law”. In line with this law, computing capacity doubled roughly every two years as well.
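A quick calculation shows how powerful such a doubling is. The sketch below assumes an idealized, perfectly regular doubling every two years; the function name `growth_factor` is made up for this example.

```python
def growth_factor(years, doubling_period_years=2.0):
    """Factor by which the transistor count grows under idealized doubling."""
    return 2.0 ** (years / doubling_period_years)


# Fifty years of doubling every two years means 25 doublings.
factor_50_years = growth_factor(50)
print(f"Growth over 50 years: about {factor_50_years:,.0f} times")
```

Twenty-five doublings amount to a factor of 2^25, which is more than 33 million.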

The doubling trend, however, has started to slow down and is anticipated to stop around 2025. Before 2006, performance per watt also doubled every two years. Since 2006, however, shrinking chips even further leads to leakage currents and causes the chips to heat up, so that advances in computing capacity require more power.

Computing performance nevertheless still grows at the rate of Moore’s law (though not through Moore’s law itself, that is, not through a doubling of transistors per area). It does so by means of so-called parallel computing, at the cost of power consumption.

Parallel computing refers to distributing a task across multiple computing cores, which speeds up the task. Parallelism, however, means sending a lot of data all over the place; that is, it requires a large throughput, which is itself subject to physical limitations that will soon be reached. (There are also other, efficiency-related, restrictions associated with parallel computing. Luckily there is a separate article on the NETWORK pages: How parallel computing can be inefficient.)
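The pattern of splitting a task, working on the pieces side by side, and collecting the results can be sketched in a few lines. This is only an illustration of the structure: the function names are invented for this example, and in standard Python, threads do not actually speed up pure number crunching, so no performance gain should be expected from this particular snippet.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """The work done by a single worker on its share of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(numbers, workers=4):
    # Split the input into roughly equal chunks, one per worker.
    chunk_size = max(1, (len(numbers) + workers - 1) // workers)
    chunks = [numbers[i:i + chunk_size]
              for i in range(0, len(numbers), chunk_size)]
    # Hand the chunks to the workers; this is where data gets moved around.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    # Collecting the partial results is a second communication step.
    return sum(partial_sums)


numbers = list(range(1000))
total = parallel_sum_of_squares(numbers)
print(total)
```

Both the distribution of the chunks and the collection of the partial sums involve moving data, which is exactly the throughput cost described above.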

Brain-inspired computers

Interestingly, there is a computing unit that defies the aforementioned scaling laws, and it is extremely good at learning tasks:

the brain!

Modern-day supercomputers have only just reached a computing power similar to that of the brain, while requiring some 8 orders of magnitude more electrical power than the brain.

In light of this stark difference, it seems to be a good idea to look into changing the computing approach altogether and explore brain-inspired computing. To get an idea of what that might be, let us first look at regular computers.

Famously, they represent data as sequences of ones and zeros; this is what is meant by the term digital. They have a Central Processing Unit (CPU), where a certain set of instructions is executed: strings of ones and zeros are modified. These strings are not stored on the CPU (it only does the logic); they come from a separate memory. The setup of a CPU with separate memory is called the von Neumann architecture. It is well suited for the above-mentioned serialized operations of traditional programming (“if X, then Y”). It is also very different from the information processing of the brain.

In the brain, many interconnected neurons send pulses to and receive pulses from each other. A neuron releases a pulse once a certain threshold is reached: it spikes. This way of calculating is approximated in computer science by so-called (artificial) spiking neural networks. Machines built upon this architecture are more energy efficient and closer to actual brains, but they are not able to achieve the quality of results of state-of-the-art technology.
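The threshold behavior can be sketched with a very simple neuron model, often called leaky integrate-and-fire. The threshold, the leak factor, and the input values below are arbitrary assumptions for illustration and are not taken from any particular neuromorphic system.

```python
def simulate_neuron(inputs, threshold=1.0, leak=0.9):
    """Return a list of 0s and 1s: 1 whenever the neuron spikes."""
    potential = 0.0
    spikes = []
    for incoming in inputs:
        # The stored potential slowly leaks away; new input adds to it.
        potential = leak * potential + incoming
        if potential >= threshold:
            # Threshold reached: emit a spike and reset.
            spikes.append(1)
            potential = 0.0
        else:
            spikes.append(0)
    return spikes


spikes = simulate_neuron([0.5, 0.5, 0.5, 0.0, 0.2, 0.9])
print(spikes)  # [0, 0, 1, 0, 0, 1]
```

The neuron stays silent while its input accumulates and sends a pulse only at the moment the threshold is crossed, so most of the time nothing has to be transmitted.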

The best-performing machine learning models are the so-called Deep Neural Networks (DNNs). Their neurons can send any value, that is, any number on the real number line, to other neurons. The spikes become smooth signals. Machines built for DNNs are less brain-like, but they are still brain-inspired. One such approach, which we will take a look at, is neuromorphic photonics.

Neuromorphic photonics

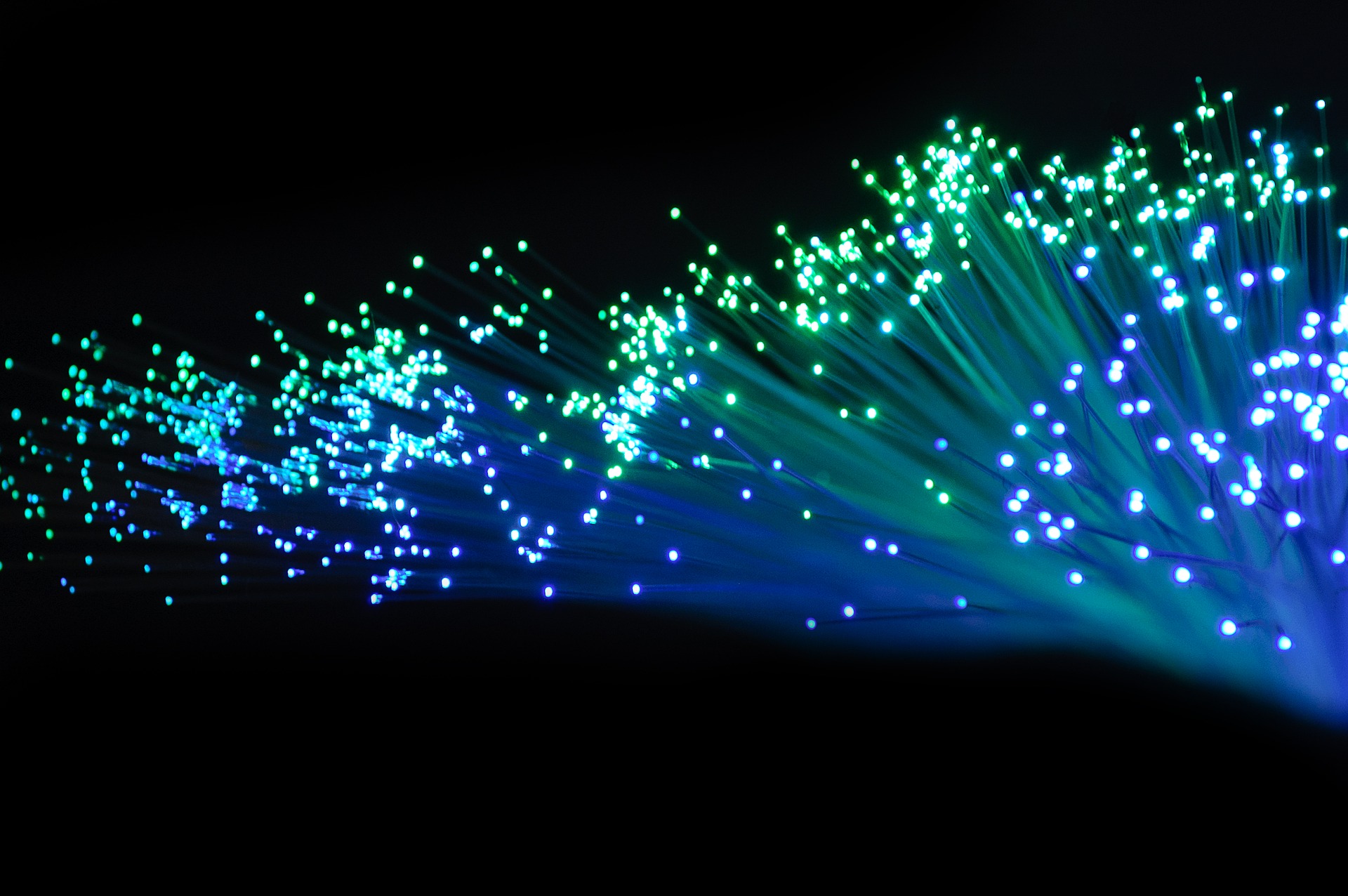

Photonics, the application of light in technical systems, is considered a contender for neuromorphic applications because light offers an enormous bandwidth while being energy efficient. One can transport information through waves, for example through their amplitude. If a given amplitude is set to correspond to a certain numerical value, then a wave with twice that amplitude carries twice the value. The amplitude can assume any value, and thus any number is representable. This is what one refers to as analog.
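As a rough numerical illustration of this idea, the sketch below encodes a number in the amplitude of a sine wave and reads it back from the peak of the sampled wave. The function names, the sampling scheme, and the restriction to non-negative values are simplifying assumptions; a real photonic system works with physical signals, not lists of samples.

```python
import math


def encode(value, unit_amplitude=1.0):
    """Amplitude that represents `value` (non-negative values only)."""
    return value * unit_amplitude


def sample_wave(amplitude, samples_per_period=1000):
    """One period of a sine wave with the given amplitude."""
    return [amplitude * math.sin(2.0 * math.pi * k / samples_per_period)
            for k in range(samples_per_period)]


def decode(samples, unit_amplitude=1.0):
    """Recover the value from the peak height of the sampled wave."""
    return max(abs(s) for s in samples) / unit_amplitude


value = 0.75
read_back = decode(sample_wave(encode(value)))
read_back_double = decode(sample_wave(encode(2.0 * value)))
print(read_back, read_back_double)
```

Doubling the encoded value doubles the amplitude, and any value in between is equally representable, in contrast to a digital signal that only distinguishes ones and zeros.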

Besides digital electronics (signal or no signal), analog electronics (representing values through charge or voltage) has also been demonstrated as a platform for neuromorphic computing, but achieving the necessary throughput (bandwidth) with metal wiring is a challenge.

Light, on the other hand, naturally offers an enormous bandwidth, is as fast as it gets, and is highly energy efficient, as moving light takes practically no energy (unlike moving electrons). All of this makes photonics a prime candidate for neuromorphic computing.

But there are still major challenges ahead. Neural networks draw their power from the combination of linear operations (matrix-vector multiplication) and non-linear functions. Photonics is an excellent tool especially for the linear operations, but implementing the non-linear functions is more challenging. Challenges from other neuromorphic approaches also carry over, like accessing and storing data in memory (ideally on-chip) and the noise sources that all analog platforms are susceptible to.
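The two ingredients, and the effect of analog noise, can be sketched as follows. The weights, the inputs, the choice of ReLU as the non-linear function, and the Gaussian noise model are all assumptions made for this example; they do not describe a specific photonic device.

```python
import random


def matvec(matrix, vector):
    """Linear part: matrix-vector multiplication."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(values):
    """Non-linear part: negative values are cut off at zero."""
    return [max(0.0, v) for v in values]


def layer(matrix, vector, noise_std=0.0, rng=None):
    """One neural network layer with optional analog-style noise."""
    rng = rng or random.Random(0)  # fixed seed keeps the example repeatable
    linear = matvec(matrix, vector)
    # Analog hardware perturbs every value slightly (assumed Gaussian here).
    noisy = [v + rng.gauss(0.0, noise_std) for v in linear]
    return relu(noisy)


weights = [[1.0, -2.0], [0.5, 0.5]]
inputs = [3.0, 1.0]

ideal = layer(weights, inputs)                    # [1.0, 2.0]
noisy = layer(weights, inputs, noise_std=0.05)    # close to, but not exactly, ideal
print(ideal, noisy)
```

In a deep network many such layers are stacked, so small deviations like these can add up, which is one reason noise is a central concern for analog platforms.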

These challenges lie at the heart of modern research in this direction. Photonics has grown tremendously during the last decades and offers an even brighter future, as Professor Ton Koonen wrote in his valedictory lecture: Light works!

The featured image is taken from Андрей Баклан via Pixabay