A crisis is usually followed by a period of reflection, in which everyone agrees that everything possible should be done to avoid a new one.

One of the lessons learned after the financial crisis of 2008, when the collapse of Lehman Brothers destabilised the global economy, was that there is a strong need to better model the underlying financial network of an economy as a whole. In such a network, links represent financial transactions between entities such as companies and banks. More precisely, to assess the vulnerability of an economic system it is not sufficient to know the default risk of each individual company; one also needs a sound description of the interlinkages between all relevant economic entities. The key concept in this context is systemic risk: the collective risk of a system of interacting agents, which quantifies the system's resilience to financial shocks.

In the Netherlands, too, it was recognised that an integrated approach was needed to tackle this problem, and that the input of various stakeholders would be crucial. A few years ago, a meeting was organised at the headquarters of Statistics Netherlands (CBS) in The Hague, where all relevant parties were present. First, there were representatives of the large Dutch banks, who wish to quantify the systemic risk of their portfolios more accurately. The clients of these banks have all sorts of mutual interlinkages, through loans or transactions for example, which must be brought into the equation. Second, an expert from the Dutch National Bank was there. One of the national bank's primary tasks is to protect the country's economic stability, which is why it monitors various performance indicators of the country's financial institutions. CBS offers both a wealth of data on the Dutch company network and expertise in network modelling. And then there were representatives from Dutch academic research institutes, bringing in expertise in state-of-the-art tools for mathematical network analysis. I think it was a good sign that I was asked to be present at the meeting, representing NETWORKS!

Various approaches to the mathematics of systemic risk have been developed. A very powerful technique is based on ideas from econophysics, a research discipline in which theories and methods originally developed in physics are applied to problems in economics. In our context, this means that the system of interacting economic agents is modelled using tools from statistical physics. A practical issue that needs to be resolved is that, for reasons of privacy, only partial information on the corresponding network is available. Techniques from econophysics make it possible to reconstruct the network (see this article by Diego Garlaschelli and Tiziano Squartini for a more detailed overview of this field), as they allow the generation of ensembles of directed weighted networks that are probabilistically consistent with the available data. Concretely, by sampling networks from such an ensemble, one can estimate any network metric, in particular metrics that provide insight into the network’s resilience.
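To give a flavour of what such a reconstruction can look like, here is a minimal Python sketch in the spirit of the fitness-based (density-corrected gravity) models from this literature. It is an illustration under stated assumptions, not the method used in the projects mentioned here. It assumes that only each entity's total outgoing and incoming exposure (its out- and in-strength) and the total number of links are known. A link from `i` to `j` then appears independently with probability `p_ij = z·s_out[i]·s_in[j] / (1 + z·s_out[i]·s_in[j])`, where `z` is tuned so that the expected number of links matches the known total. An existing link receives weight `s_out[i]·s_in[j] / (W·p_ij)`, so that the expected weight equals the gravity-model value `s_out[i]·s_in[j] / W`. The strengths and link count are made-up numbers, and all function names are hypothetical.

```python
import numpy as np

def link_probabilities(s_out, s_in, z):
    """Fitness-model link probabilities p_ij = z*s_out_i*s_in_j / (1 + z*s_out_i*s_in_j), no self-loops."""
    zxy = z * np.outer(s_out, s_in)
    p = zxy / (1.0 + zxy)
    np.fill_diagonal(p, 0.0)
    return p

def calibrate_z(s_out, s_in, n_links):
    """Bisection on a log scale for z such that the expected number of links equals n_links.
    The expected link count sum(p_ij) increases monotonically with z."""
    lo, hi = 1e-12, 1e12
    for _ in range(200):
        mid = np.sqrt(lo * hi)  # geometric midpoint, since z may span many orders of magnitude
        if link_probabilities(s_out, s_in, mid).sum() < n_links:
            lo = mid
        else:
            hi = mid
    return np.sqrt(lo * hi)

def sample_network(p, s_out, s_in, rng):
    """Draw one weighted directed network: each link is an independent Bernoulli(p_ij) draw,
    and an existing link gets weight s_out_i*s_in_j / (W*p_ij), so E[w_ij] = s_out_i*s_in_j / W.
    Assumes total out-strength equals total in-strength (W)."""
    W = s_out.sum()
    present = rng.random(p.shape) < p  # diagonal has p = 0, so no self-loops are drawn
    w = np.zeros_like(p)
    w[present] = np.outer(s_out, s_in)[present] / (W * p[present])
    return w

# Hypothetical data: 5 entities with known total outgoing/incoming exposures and 8 known links.
s_out = np.array([50.0, 30.0, 10.0, 5.0, 5.0])
s_in = np.array([20.0, 40.0, 25.0, 10.0, 5.0])
n_links = 8

z = calibrate_z(s_out, s_in, n_links)
p = link_probabilities(s_out, s_in, z)

rng = np.random.default_rng(42)
samples = [sample_network(p, s_out, s_in, rng) for _ in range(2000)]

# Ensemble estimate of a network metric; here the number of links, as a sanity check.
mean_links = np.mean([(w > 0).sum() for w in samples])
print(round(float(mean_links), 2))
```

Any other metric, for instance one describing how losses would propagate, can be estimated the same way by averaging it over the sampled networks. Drawing links independently keeps sampling trivial, which is one reason such maximum-entropy-style ensembles are convenient in practice.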

And this is where the expertise from NETWORKS comes in. Systemic risk manifests itself through the presence of relatively many triangles in the graph representing financial relations between entities, given its number of links. Assessing the likelihood of such scenarios has been an important line of research in NETWORKS. In projects initiated at Leiden University, in which researchers from the University of Amsterdam also participate, constrained random graphs were studied, shedding light on their most likely realisation conditional on, say, the graph having a specific number of edges and triangles. Deep mathematical theory comes to the rescue in describing this rare-event behaviour of random graphs, relying on the mathematical concept of graphons. In NETWORKS we have extended this body of work, co-initiated by Abel Prize winners Raghu Varadhan and László Lovász, to random graph models that evolve over time, which also makes it possible to cope with network information becoming available as time progresses. It is great to see that all sorts of sophisticated ideas and results from our program are slowly trickling down into practically applicable concepts!
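The notion of "relatively many triangles given the number of links" can be made concrete in the language of graphons. The hypothetical helpers below compute, for a simple undirected graph with adjacency matrix `A` on `n` nodes, the edge density `t(K2) = sum(A)/n²` and the triangle density `t(K3) = trace(A³)/n³` (where `trace(A³)` counts each triangle six times). For an Erdős–Rényi-like graph, whose graphon is constant, `t(K3) ≈ t(K2)³`, so the ratio `t(K3)/t(K2)³` indicates how many triangles there are relative to the edge count. This is only an illustrative statistic; it does not implement the large-deviation analysis referred to above. The two toy graphs have the same number of edges but very different numbers of triangles.

```python
import itertools
import numpy as np

def from_edges(n, edges):
    """Adjacency matrix of a simple undirected graph on n nodes (hypothetical helper)."""
    A = np.zeros((n, n), dtype=int)
    for i, j in edges:
        A[i, j] = A[j, i] = 1
    return A

def edge_and_triangle_density(A):
    """Homomorphism densities t(K2) = sum(A)/n^2 and t(K3) = trace(A^3)/n^3."""
    n = A.shape[0]
    t_edge = A.sum() / n**2
    t_tri = np.trace(A @ A @ A) / n**3
    return t_edge, t_tri

def triangle_excess(A):
    """Ratio t(K3)/t(K2)^3: about 1 for a constant graphon, above 1 means more
    triangles than the edge density alone would suggest."""
    t_edge, t_tri = edge_and_triangle_density(A)
    return t_tri / t_edge**3

# Two disjoint 4-cliques: 12 edges, 8 triangles.
clustered = from_edges(8, [e for block in (range(0, 4), range(4, 8))
                           for e in itertools.combinations(block, 2)])
# K_{4,4} minus a perfect matching: also 12 edges, but bipartite, hence no triangles.
bipartite = from_edges(8, [(i, j) for i in range(4) for j in range(4, 8) if j != i + 4])

for name, A in [("clustered", clustered), ("bipartite", bipartite)]:
    n_edges = A.sum() // 2
    n_triangles = np.trace(A @ A @ A) // 6
    print(name, n_edges, n_triangles, round(triangle_excess(A), 3))
# clustered 12 8 1.778
# bipartite 12 0 0.0
```

Conditioning a random graph on a value of this kind of ratio far above 1 is exactly the type of rare event whose most likely realisations the graphon-based theory describes.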

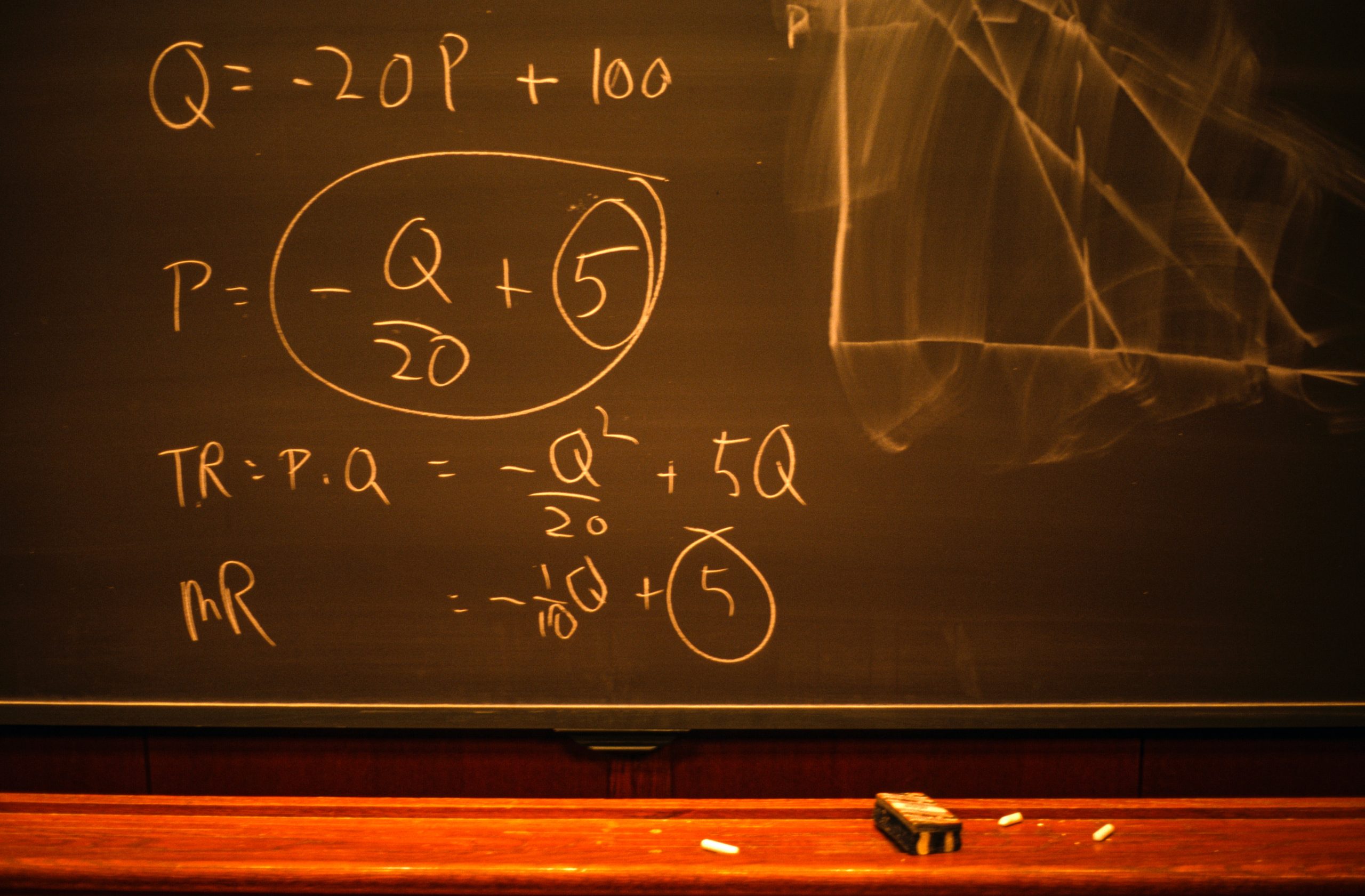

Featured image by Mick Haupt from Unsplash.