Alfred Aho and Jeffrey Ullman received the 2020 A.M Turing Award for their contributions to computer science. Let’s explore how their contributions have shaped the principles, techniques and tools for writing compilers.

Alfred Aho and Jeffrey Ullman received the 2020 A.M Turing Award for their contributions to computers science. What are their contributions and what has been their impact?

Aho and Ullman developed (in most cases together and sometimes in cooperation with others) efficient algorithms for string pattern matching, and for parsing, analyzing and translating computer programs. These algorithms form the foundation for a plethora of tools that are still in use today. Examples are grep, lex, yacc, awk and many more.

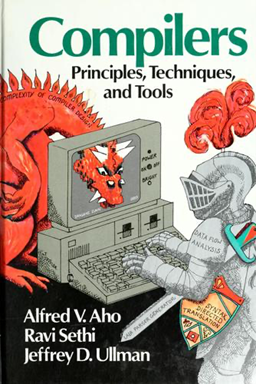

Together they produced a dozen of monumental text books that have inspired and educated generations including The Theory of Parsing, Translation and Compiling, Two Volumes (1972, 1973), The Design and Analysis of Computer Algorithms (1974), Compilers: Principles, Techniques, and Tools (1986), and Foundations of Computer Science (1992). As a personal note: the first two-volume book above hooked me to the topic of parsing and parser generation and is still my goto book to answer many questions.

Working separately from each other they also have produced an impressive amount of text books and articles. For instance, Ullman (co-)authored several text books on database systems, automata theory and formal languages.

Copyright: this image can be found widely on many websites; This version is from openlibrary.org.

All the above mentioned topics are numerous and important contributions to computer science but they are part of a larger picture. The overarching view is that many of their contributions nicely fit in, and were intended for, the global architecture of a compiler. A compiler is among the most complicated software systems around and the “dragon” mentioned in the title refers to them and is based on the cover of their compiler book Compilers: Principles, Techniques, and Tools (the covers vary per edition, this is from the 1986 edition, colloquially called the “red dragon book”). A dragon is showing its ugly head through a computer screen and a knight is trying to conquer it with various labelled tools: a “LALR parser generator” sword, a “Syntax directed translation” shield, and “Data flow analysis” armor.

The Dragons

Before we dive into some details, let’s first explore what a compiler is. Suppose you have written a program that counts the number of cycles in a graph. Different programming languages are implemented differently, but the general gist is that your cycle counting program will be given as input to a compiler and out comes an executable version of the program that can be executed. The executable version will probably be in the form of native machine code instructions.

How does a compiler achieve this feat? A compiler is usually divided in several separate phases:

- A lexical scanner breaks up the input text into separate tokens. For instance, x=3 will be split into the tokens x, = and 3.

- This stream of tokens is fed into a parser to determine their syntactic structure. Continuing our example, the parser determines that we are dealing with an assignment statement consisting of an identifier x followed by an assignment symbol =, followed by an expression of the form 3. As a side effect of this recognition step, it also produces a tree structure to represent this assignment: the syntax tree.

- The syntax tree is used for further analysis, such as:

- Are all variables properly declared?

- Are all expressions type correct?

- Are all functions called with proper arguments?

- The final phase is devoted to the syntax-directed translation of the syntax tree to machine instructions. Usually this is done in several phases that involve more intermediate, lower level, representations of the original program.

All these phases can and probably will use algorithms and techniques invented (or at least popularized) by Aho and Ullman.

Lexical Scanner

The lexical scanner will use some form of text pattern matching involving finite automata. The idea is to use regular expressions to describe the desired lexical patterns (for instance, an identifier, a number, and white space and comment conventions) and then translate these regular expressions to optimized finite automata that can do very efficient lexical scanning. The template here is that a lexical scanner generator takes regular expressions as input and generates a lexical scanner. The above mentioned lex tool is an example of this approach.

Parser

A parser can be created in many ways. The most straightforward but also the most laborious approach is to write a parser by hand. Read token after token and build a syntax tree each time a syntactic construct is recognized. A grammar for an average programming language easily defines hundreds to thousands of different syntactic constructs resulting in hundreds to thousands of manually written functions to parse these constructs. However, a manually written parser has one major advantage: it can rather easily be extended in such a way that excellent error recovery and error reporting are supported. This makes a huge difference in usability: from only reporting a syntax error and halting the parser to reporting the erroneous part, skipping it and continuing the parse to find more errors.

For parsers, a similar template exists as for lexical scanners: use a parser generator that takes a grammar as input and generates a parser for that grammar. But this is easier said than done and requires the help of the theory of formal languages. Management summary: grammars can be subdivided in different classes and the complexity of parser generation differs per grammar class.

Grammar

A grammar is a set of rules that describes a language. Typically a grammar defines terminals (literal text, also called tokens) and non-terminals (symbols defined by the grammar that need further expansion) and rules that establish the relation between the two. For instance, in the grammar

L := a

L := b

L := c L c

L is a non-terminal and a, b and c are the terminals. L is defined by three rules which together define an infinite language (let’s call it L) of sentences: a, b, cac, cbc, ccacc, ccbcc, and so.

Where a grammar defines a possibly infinite set of sentences, parsing does the reverse: given a grammar and a sentence determine whether the sentence is in the language defined by the grammar. Continuing the above example:

a is in L because of the rule L := a.

cac is in L, because of the rule L := c L c, provided that a is in L. It is as shown in the previous step.

etc.

A parser recognizes if a sentence is in a given language or not and it also builds a syntax tree that records the steps needed to derive that sentence from the grammar. A formidable problem is how to do this parsing efficiently and there are many solutions for it, LALR(1) is one of them. A typical grammar rule for the assignment statement in some programming language might be

STATEMENT := ID = EXPRESSION

Examples are:

x = 1

count = count + 1

This rule for assignment is called a syntactic construct of the language. So-called meta-programming languages combine the features and techniques described here and are particularly suited for compiler writing. One example is the Rascal meta-programming language.

Aho and Ullman introduced the grammar class LALR(1) which stands for Look Ahead Left to Right with 1 token look ahead. Parser generation for LALR(1) grammars is itself efficient and also results in efficient parsers. The above mentioned yacc tool is an example of this approach.

Flow of information

Data flow analysis and syntax-directed translation had already been around as ideas but they were refined and popularized by Aho and Ullman.

Data flow

Data flow analysis is concerned with the flow of information through a program. It basically amounts to building a graph that represents all control structures and connects all uses of variables to their definitions. Once this graph is available, graph algorithms are used to answer questions like:

- Is the value of this variable ever used? If not, remove it.

- Is this code ever executed? If not remove it.

- What is the last code point where the value of this variable is used? After that code point, the location where the value of the variable is stored can be reused.

- Can these two statements be interchanged without affected the answer of the computation? This can enable certain optimizations.

Syntax directed translation

Syntax directed translation is a computational model to organize the translation from syntax tree to machine code and amounts to organizing the translation steps per grammar rule. Syntax directed translation exists in various forms and in most cases top-down and bottom-up information flow between different translation rules is supported. Syntax directed translation is based on a traversal of the syntax tree. In a top down traversal declaration information (e.g., the formal parameters of the function currently being visited) can be propagated downwards to inner syntactic constructs. In a bottom up traversal the code generated for individual syntax constructs can be propagated upwards so that at the root of the tree all code fragments can be combined into code for the complete program.

Wrap up

Here we have it: efficient generation of lexical scanners and parsers combined with data flow analysis and syntax-directed translation. Hundreds of compilers have been built using these techniques. A solid foundation laid by Aho and Ullman that will help to slay the compiler dragon for years to come.