Often there are different strategies to perform a given task - or “to solve a given problem”, as it is usually called - leading to different algorithms. These algorithms typically differ in efficiency: some are fast while others are slow. Of course the actual time a computer needs to solve a problem depends on the speed of the computer. Moreover, it depends on the size of the problem at hand: sorting a thousand numbers will take less time than sorting a million numbers. It is nevertheless possible to compare the efficiency of algorithms in a mathematical way. To this end the number of “steps” of the algorithm is analyzed as a function of the number of input elements.

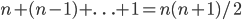

For instance, consider the algorithm described in Basic Notions - Algorithms for creating a sorted stack of cards. Suppose the input consists of  cards. To find the smallest numbered card we look at all

cards. To find the smallest numbered card we look at all  cards, to find the next smallest card we look at the remaining

cards, to find the next smallest card we look at the remaining  cards, and so on. Thus in total we perform

cards, and so on. Thus in total we perform  steps. The exact number depends on what we consider to be a “step”, but the important fact is that the number of steps grows quadratically in the number of cards to be sorted. In technical terms: the running time of the algorithm is

steps. The exact number depends on what we consider to be a “step”, but the important fact is that the number of steps grows quadratically in the number of cards to be sorted. In technical terms: the running time of the algorithm is  . If the running time of an algorithm grows quadratically, it will take four times as long when the input doubles in size. An algorithm whose running time grows linearly - we write: the running time is

. If the running time of an algorithm grows quadratically, it will take four times as long when the input doubles in size. An algorithm whose running time grows linearly - we write: the running time is  - would only take twice as long when the input size doubles. Thus linear-time algorithms are much faster than quadratic-time algorithms for large inputs.

- would only take twice as long when the input size doubles. Thus linear-time algorithms are much faster than quadratic-time algorithms for large inputs.

An important distinction between algorithms is whether their running time grows polynomially in the input size  such as

such as  or

or  , or exponentially such as

, or exponentially such as  or

or  . The running time of the latter type of algorithms grows so quickly that they are basically useless, unless the input size is very small. Suppose, for instance, that we have an algorithm that performs

. The running time of the latter type of algorithms grows so quickly that they are basically useless, unless the input size is very small. Suppose, for instance, that we have an algorithm that performs  steps on an input of size

steps on an input of size  , and that we have a computer that can do 1 billion steps per second. Then the algorithm would take only roughly 1 second on an input of size 30. However, on an input of size 50 it would already take over 13 days, and on an input of size 60 it would take 36 years... Buying a faster computer is not going to help you much. What you should try instead is to come up with a more efficient algorithm. Trying to find the most efficient algorithm for various computational problems is a lively and important branch of theoretical computer science.

, and that we have a computer that can do 1 billion steps per second. Then the algorithm would take only roughly 1 second on an input of size 30. However, on an input of size 50 it would already take over 13 days, and on an input of size 60 it would take 36 years... Buying a faster computer is not going to help you much. What you should try instead is to come up with a more efficient algorithm. Trying to find the most efficient algorithm for various computational problems is a lively and important branch of theoretical computer science.